Artificial intelligence (AI) is now built into everyday work across many industries. AI agents bring real benefits, from automating tasks to supporting decision-making, but they also introduce new challenges. One of the most pressing is how much supervision AI agents should have, a question that remains actively debated.

– AI Regulations: A Balancing Act

The appropriate level of supervision for AI agents is a delicate balancing act. Too little supervision can lead to AI agents causing harm through errors, biases, and unintended consequences. However, too much supervision can stifle innovation and limit the potential benefits of AI. It is essential to find a middle ground that allows AI agents to operate with enough autonomy to be effective while still ensuring that they are safe and accountable.

| Level of Supervision | Benefits | Risks |
|---|---|---|
| No Supervision | High creativity and innovation | High risk of errors, biases, and unintended consequences |
| Full Supervision | Low risk of errors or harm | Stifles innovation and limits potential benefits |
| Human-in-the-Loop | Balance of autonomy and control | Can be time-consuming and costly |
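
To make the three supervision levels concrete, here is a minimal Python sketch of how an agent's actions might be routed under each one. Everything in it is an illustrative assumption rather than an established API: the `Supervision` enum, the `Action` class, the numeric `risk` score, and the `0.5` threshold are all hypothetical names and values.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Supervision(Enum):
    """The three supervision levels compared in the table (hypothetical names)."""
    NONE = "no supervision"
    HUMAN_IN_THE_LOOP = "human-in-the-loop"
    FULL = "full supervision"


@dataclass
class Action:
    description: str
    risk: float  # assumed risk score in [0, 1]; how it is computed is out of scope


def needs_review(action: Action, mode: Supervision, threshold: float = 0.5) -> bool:
    """Decide whether a human must approve the action before it runs."""
    if mode is Supervision.NONE:
        return False  # the agent acts entirely on its own
    if mode is Supervision.FULL:
        return True  # every action waits for a person
    # Human-in-the-loop: only actions at or above the risk threshold are reviewed.
    return action.risk >= threshold


def run(
    action: Action,
    mode: Supervision,
    approve: Callable[[Action], bool],
    threshold: float = 0.5,
) -> str:
    """Execute the action unless a required human review rejects it."""
    if needs_review(action, mode, threshold) and not approve(action):
        return "blocked"
    return "executed"
```

In this sketch the cost noted in the table shows up directly: under `FULL` every call waits on `approve`, while under `HUMAN_IN_THE_LOOP` only the riskier actions do. Choosing the threshold is the balancing act itself.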

– Ethical Considerations and Societal Impact

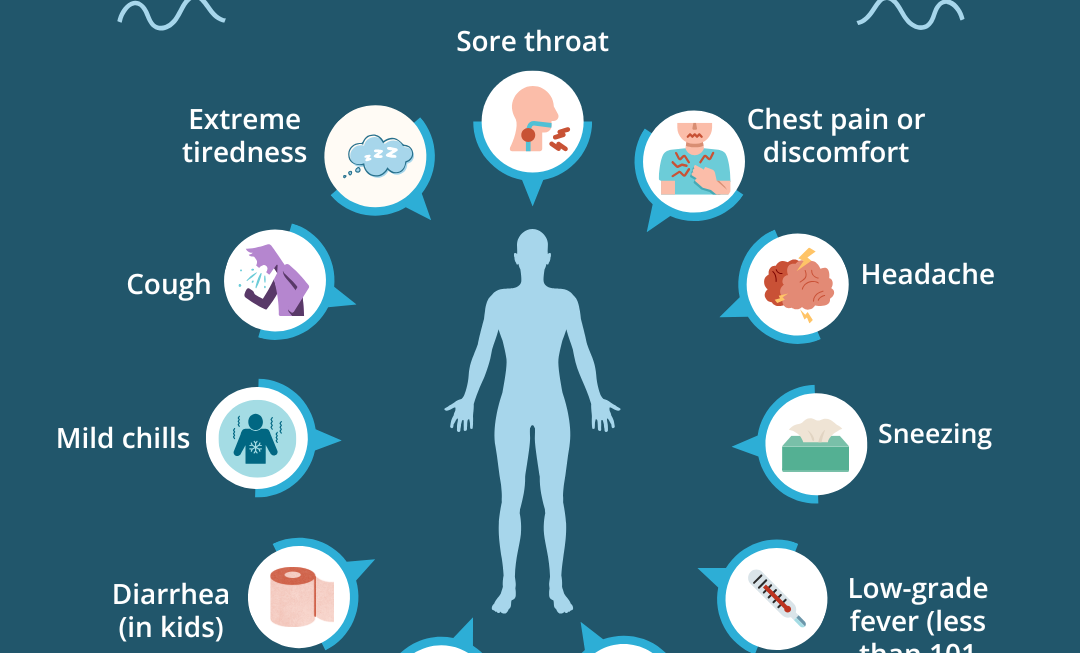

The ethical implications of AI supervision are profound. Unmonitored AI can lead to algorithmic bias, discrimination, and potential harm. Privacy concerns arise as AI collects personal data, and questions of autonomy and responsibility emerge as AI makes decisions affecting human lives. At the societal level, how AI is supervised affects job displacement and the need for reskilling. The benefits of greater efficiency and productivity must be weighed against the potential for widening income inequality and disruption of the labor force. Transparent and responsible supervision of AI agents is crucial to mitigate these risks and ensure the ethical and beneficial use of AI.

– Enhancing Trust and Transparency

To enhance trust and transparency, companies should establish clear and well-defined guidelines for AI agent supervision. These guidelines should include specific objectives, measurable performance indicators, and regular monitoring mechanisms. This will help ensure that agents operate in a responsible and accountable manner while minimizing the risk of unintended consequences. Additionally, companies should communicate openly about AI agent capabilities and limitations to foster greater understanding and trust among stakeholders.
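
As one way to picture such a guideline, the sketch below pairs an objective with measurable indicators and a review step that could be run on a regular schedule. It is a minimal illustration under stated assumptions: the `Guideline` and `Indicator` classes, the indicator names, and the target values are all hypothetical and not drawn from any standard.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """A measurable performance indicator with a target (hypothetical structure)."""
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


@dataclass
class Guideline:
    """A supervision guideline: one objective plus the indicators that track it."""
    objective: str
    indicators: list[Indicator]

    def review(self, observed: dict[str, float]) -> list[str]:
        """Return the names of indicators that missed their target or were not reported."""
        missed = []
        for indicator in self.indicators:
            value = observed.get(indicator.name)
            # A missing measurement counts as a miss, so monitoring gaps are surfaced.
            if value is None or not indicator.met(value):
                missed.append(indicator.name)
        return missed


# Illustrative guideline; the names and numbers are assumptions, not recommendations.
guideline = Guideline(
    objective="Resolve support tickets accurately",
    indicators=[
        Indicator("resolution_accuracy", target=0.95),
        Indicator("escalation_rate", target=0.10, higher_is_better=False),
    ],
)
```

Calling `guideline.review(...)` with each period's measurements yields the list of indicators that need attention, which can then be shared with stakeholders as part of open reporting.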

– Wrapping Up

The debate over the optimal level of supervision for AI agents is far from settled. Striking the right balance between autonomy and oversight is crucial to harnessing AI's benefits while mitigating its risks. Companies must navigate this landscape carefully, weighing the benefits of giving their AI systems room to learn and adapt against the need for responsible stewardship.

Ultimately, the path forward is a nuanced approach that tailors the level of supervision to the specific context and application. By fostering a culture of continuous learning and iterative improvement, companies can realize the full potential of AI while ensuring its responsible and ethical deployment. A collaborative, measured approach to human-machine cooperation is the one most likely to benefit society.